Programming Projects

Featured Post

This post summarizes a public machine learning project I worked on in collaboration with Ilana Zane. In our search to better understand machine learning, we ended up building our own machine learning framework, modeled after TensorFlow, which we call Artifice. All of the code, along with instructions on how to download the software, can be found here.

Posts

As artificial intelligence has gained more media attention with the recent releases of DALL-E and ChatGPT, more and more people seem to be entranced by the power of machine learning. As someone with a heavy background in physics and mathematics, I find it amazing how these models mostly boil down to some clever linear algebra and calculus, setting aside the details of the data they’re trained on (for an interesting example of how training data can affect performance in machine learning, see my article on autoencoders). So I figured it would be an interesting exercise to dive into the mathematics of how machines actually learn, primarily through neural networks, with the hope of demystifying machine learning for those both inside and outside the field. This article serves as a companion piece to Artifice, a joint project I worked on with Ilana Zane.

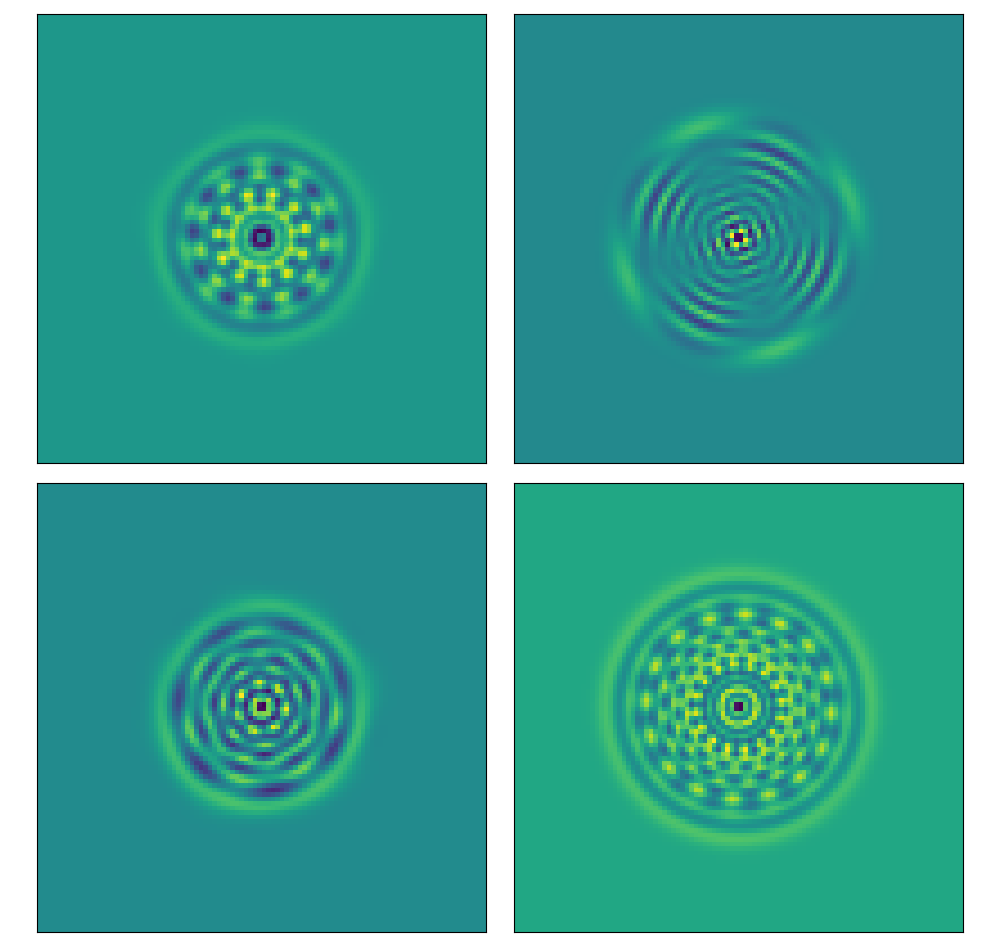

I recently finished my Master’s Degree in Physics at Stony Brook University and wanted to summarize some of the research I did there on Quantum State Tomography (QST). I don’t expect many people outside (or, for that matter, even inside) the field of physics to understand most of the technical details of QST, so the goal of this post is to make my work as accessible as possible to anyone interested in learning more. An in-depth summary can be found in my Master’s Thesis, and all of the corresponding code for the project can be found on my GitHub.

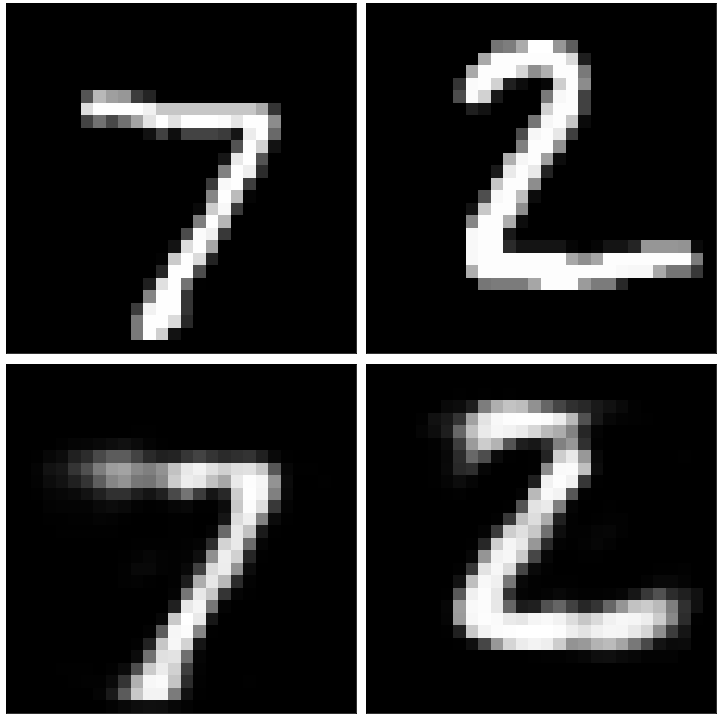

Recently my girlfriend has been learning about autoencoders in a deep learning class she’s taking for her master’s degree, and they caught my interest. I decided to try my hand at building some basic autoencoder architectures using the Keras API from TensorFlow in Python. All of the code for the project and the generated figures can be found in the Fun_with_Autoencoders Jupyter Notebook on my GitHub.